Learn more about me and the cool products I have contributed to!

Installing GreenPlum & Python3.7 on Ubuntu Server

A detailed tutorial for installing Greenplum

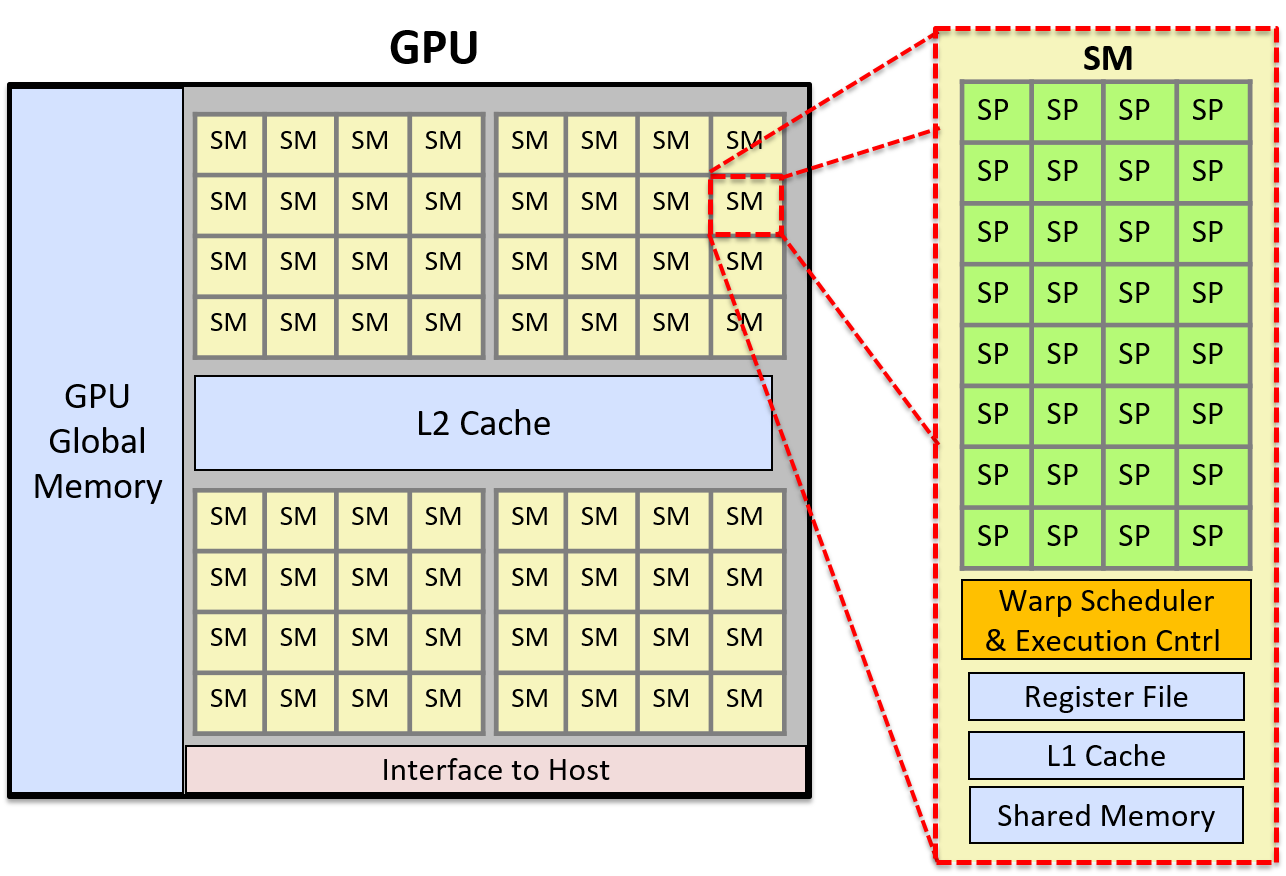

Read moreCUDA编程 2. Elementwise

本篇参考LeetCUDA的实现

Elementwise 操作

Elementwise是一类算子的统称,所谓算子(Operator),就是一个执行特定数学运算的函数单元,可以类比数据库的Operator。

Elementwise类的算子特征就是每一个位置的元素只喝对应位置的元素进行计算,互不干扰,换句话说就是“位置对齐,各算各的”。因此,不难发现我们上一片中定义的vectorAdd核函数就是一个Elementwise算子。

下面让我们用一些例子来透彻理解Elementwise算子

Elementwise Add

首先,对于普通的fp32的elementwise add,和我们上一篇写的vectorAdd是一样的。在此就不赘述了。

这个方法还可以进行优化,如下:

1 | __global__ void elementwise_add_fp32x4(float *a, float *b, float *c, int numElements){ |

关于向量化访存,可以参考这篇文章

GPU Architecture and CUDA Programming

Read moreFrom C# and Python -- A Deep Dive into Language Execution and Typing Models

Starting from the difference between C# and Python...

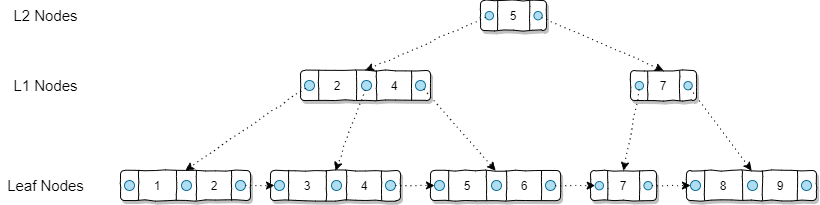

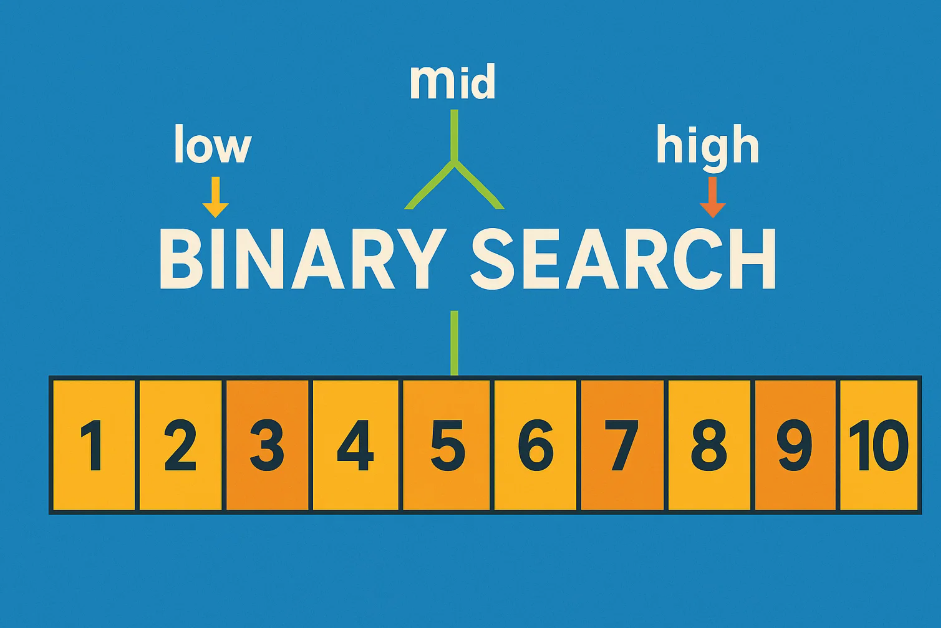

Read moreA Simple and Clear Induction and Summary of Binary Search

Read more